There are many benefits in moving artificial intelligence from the cloud to the local device – these include improvements to privacy, security, speed and reliability. However, device local AI (Edge AI and Endpoint AI) has only become possible thanks to improvements in both the computing abilities of embedded devices and improvements in the field of artificial intelligence.

In this post we’ll look at the steps in enabling hardware accelerated image classification on an i.MX8 based Coral Dev Board. This single board computer includes a Edge TPU coprocessor – a small ASIC built by Google that’s specially-designed to execute state-of-the-art neural networks at high speed and low power. We’ve used this to create a Linux ‘Tux mascot detector’ to detect if an object placed in front of the camera is the Tux mascot or something else.

We’ve chosen to use TensorFlow – one of the most widely used open-source packages available for machine learning and artificial intelligence. Specifically we’ll be using TensorFlow Lite – a lighter weight version of TensorFlow intended for use on mobile, embedded and constrained devices.

In order to perform image classification, we need a Machine Learning (ML) model. We could create and train one from scratch, however this requires a good understanding of Machine Learning, a very large data set and lots of computing power. Fortunately, visual recognition is a common problem with pre-existing solutions, thus we can leverage a pre-existing and pre-trained model instead. We’ve chosen to use Google’s MobileNet v2 model (read more here) which has been trained against millions of images and thousands of object classes via the ImageNet dataset. This dataset doesn’t know anything about Tux, however we can use ‘transfer learning‘ to re-train it for our purpose. Due to the construction of neural networks, it’s possible to retrain just the top layers (normally representing the classifier) and leave the lower layers as they are (which are the ones associated with feature detection and generically understanding the visual world). This allows us to re-train the model with our very small data set to get accurate results and without overfitting.

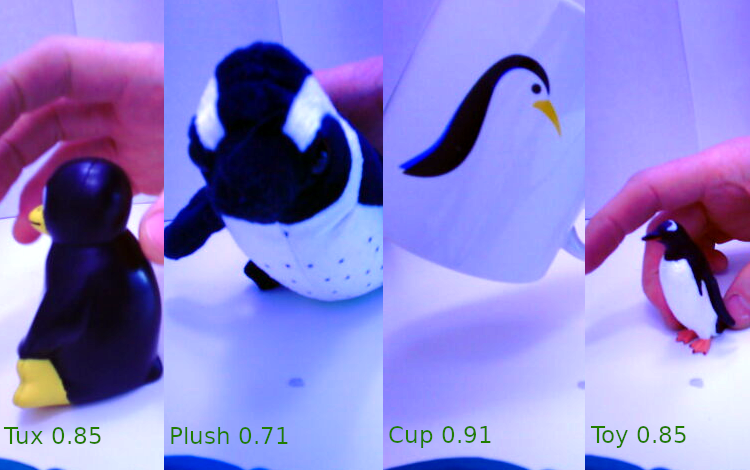

In order to re-train our model we used the captured videos of the Tux mascot and a variety of penguin related objects – such as a cup with a penguin on, a penguin figurine and soft toy. We captured a video of each object using GStreamer and a camera connected to the Coral Dev board, as follows:

$ gst-launch-1.0 v4l2src ! video/x-raw,width=640,height=480 ! autovideoconvert ! jpegenc ! avimux ! filesink location=/tmp/cup.aviWe then transferred these to a PC where we used ffmpeg to extract a series of still images (see cover photo) – we then manually removed images that weren’t representative – for example images where the object was out of view. As follows:

$ ffmpeg -i cup.avi -vcodec copy data/cup/cup%d.jpgFinally, it’s time to re-train our model. To do this we used TensorFlow Lite Model Maker via the following Python script:

import tensorflow as tf

assert tf.version.startswith('2')

from tflite_model_maker import model_spec

from tflite_model_maker import image_classifier

from tflite_model_maker.config import ExportFormat

from tflite_model_maker.config import QuantizationConfig

from tflite_model_maker.image_classifier import DataLoader

data = DataLoader.from_folder("./data")

train_data, test_data = data.split(0.9)

model = image_classifier.create(train_data, model_spec="mobilenet_v2")

loss, accuracy = model.evaluate(test_data)

config = QuantizationConfig.for_int8(train_data)

model.export(export_dir='.', quantization_config=config, export_format=[ExportFormat.LABEL]) This script will use use transfer learning to re-train a model based on our collection of images (which we’ve organised in a directory named data with subdirectories containing images of each object type). The output of this is the trained model (model.tflite) and output labels (labels.txt). The above script splits the provided data set into training data and test data, re-trains a MobileNet v2 model using our data and evaluates it against the test data. We then perform post-training quantisation – this converts floating point values (such as weights and activation outputs) to integers. Using lower precision values helps reduce the model size and improves latency – furthermore, it’s a requirement that models are quantized with 8-bit fixed values in order to take full advantage of the Edge TPU.

As we wish to use the model on the Coral dev board – we need to use the Edge TPU compiler to convert the model so that it’s compatible for use with the Edge TPU. We performed this on the host PC as follows:

$ edgetpu_compiler -s model.tflite

Edge TPU Compiler version 16.0.384591198

Started a compilation timeout timer of 180 seconds.

Model compiled successfully in 684 ms.

Input model: model.tflite

Input size: 2.65MiB

Output model: model_edgetpu.tflite

Output size: 2.79MiB

On-chip memory used for caching model parameters: 2.71MiB

On-chip memory remaining for caching model parameters: 4.98MiB

Off-chip memory used for streaming uncached model parameters: 64.00B

Number of Edge TPU subgraphs: 1

Total number of operations: 70

Operation log: model_edgetpu.log

Operator Count Status

RESHAPE 1 Mapped to Edge TPU

SUB 1 Mapped to Edge TPU

FULLY_CONNECTED 1 Mapped to Edge TPU

AVERAGE_POOL_2D 1 Mapped to Edge TPU

DEPTHWISE_CONV_2D 17 Mapped to Edge TPU

QUANTIZE 2 Mapped to Edge TPU

MUL 1 Mapped to Edge TPU

CONV_2D 35 Mapped to Edge TPU

SOFTMAX 1 Mapped to Edge TPU

ADD 10 Mapped to Edge TPU

Compilation child process completed within timeout period.

Compilation succeeded!This converts the Tensorflow Lite model (model.tflite) into an Edge TPU compatible model (model_edgetpu.tflite file) allowing for operations to be delegated to the TPU.

Now that we have a suitable model, we’re ready to use it on the Coral Dev Board. Coral’s recommended Linux distribution is Mendel Liunx – a lightweight derivative of Debian distribution that fully supports the Coral hardware. However we decided to use Yocto (Warrior) with the meta-coral and meta-tensorflow-lite Yocto layers instead.

In order to utilise the TPU we need to integrate the runtime libedgetpu library. However care must be taken to ensure that the version of Tensorflow Lite that the libedgetpu was built against matches the version used to run the model. Therefore we decided to rebuild this library to match our version of Tensorflow Lite. This is reasonably straight forward, thanks to Coral’s notes – first we checked out the sources for libedgetpu.

$ git clone https://github.com/google-coral/libedgetpu # HEAD:ea1eaddbddece0c9ca1166e868f8fd03f4a3199eWe then modified the workspace.bzl to reflect the version of Tensorflow Lite that we are using, as shown below:

diff --git a/workspace.bzl b/workspace.bzl

index 8494c0ff92f9..998f0c55a581 100644

--- a/workspace.bzl

+++ b/workspace.bzl

@@ -6,8 +6,8 @@ load("@bazel_tools//tools/build_defs/repo:http.bzl", "http_archive")

load("@bazel_tools//tools/build_defs/repo:utils.bzl", "maybe")

# TF release 2.5.0 as of 05/17/2021.

-TENSORFLOW_COMMIT = "a4dfb8d1a71385bd6d122e4f27f86dcebb96712d"

-TENSORFLOW_SHA256 = "cb99f136dc5c89143669888a44bfdd134c086e1e2d9e36278c1eb0f03fe62d76"

+TENSORFLOW_COMMIT = "919f693420e35d00c8d0a42100837ae3718f7927"

+TENSORFLOW_SHA256 = "70a865814b9d773024126a6ce6fea68fefe907b7ae6f9ac7e656613de93abf87"

CORAL_CROSSTOOL_COMMIT = "6bcc2261d9fc60dff386b557428d98917f0af491"

CORAL_CROSSTOOL_SHA256 = "38cb4da13009d07ebc2fed4a9d055b0f914191b344dd2d1ca5803096343958b4"We then rebuilt libedgetpu.so as follows:

$ DOCKER_CPUS="k8" DOCKER_IMAGE="ubuntu:18.04" DOCKER_TARGETS=libedgetpu make docker-build

$ DOCKER_CPUS="armv7a aarch64" DOCKER_IMAGE="debian:stretch" DOCKER_TARGETS=libedgetpu make docker-buildFinally we can obtain the generated libedgetpu.so.1.0 from out/throttled/aarch64/ and include it in our Yocto build.

Please note that the Edge TPU compiler will generate models for the latest runtime – thus if you aren’t using the latest version of the runtime (libedgetpu) then you can use the ‘–min_runtime_version‘ argument when invoking the compiler to specify the version required (to match the value of kCurrent in libedgetpu’s api/runtime_version.h file).

The next step was creating an application that makes use of our model – our application is written in C++ and uses OpenCV to capture video frames from the camera and makes use of Tensorflow lite to perform image classification.

Whilst our application is very similar to most of the example Tensorflow Lite applications (e.g. here and here), let’s take a look at how we tell Tensorflow Lite about out hardware accelerator (without error handling for clarity):

auto model = tflite::FlatBufferModel::BuildFromFile(model_file.c_str());

std::shared_ptr edgetpu_context = edgetpu::EdgeTpuManager::GetSingleton()->OpenDevice();

tflite::ops::builtin::BuiltinOpResolver resolver;

resolver.AddCustom(edgetpu::kCustomOp, edgetpu::RegisterCustomOp());

std::unique_ptr interpreter;

tflite::InterpreterBuilder(*model, resolver)(&interpreter);

interpreter->SetExternalContext(kTfLiteEdgeTpuContext, edgetpu_context.get());

interpreter->SetNumThreads(1);

interpreter->AllocateTensors();We now have all the pieces we need and can finally perform fast hardware accelerated image classification on a Coral Dev board. All that’s left is to provide a video frame as an input Tensor to interpreter, run Invoke to perform an inference and obtain the results by reading the output Tensor.

We created a video to demonstrate our Tux detector (as well as to demonstrate how we can optimise its boot time to 2 seconds) – look out for this in the coming weeks on our YouTube channel.

There are many ways to utilise Coral’s Edge TPU – we used an old version of Yocto, manually rebuilt the libedgetpu library and used the Tensorflow Lite C++ APIs. However there are many other means, for example using Mendel Linux, using Python or even using Coral’s libcoral C++ API. There is plenty more information here.

May 2022 Update – In order to demonstrate our capabilities in boot time optimisation we built upon the demo in this post and made it boot in 2 seconds – find out more here.